Data science is no longer confined to computing and scientific research. In an internet-connected world, data science, machine learning and artificial intelligence are far more widely applicable than was once imagined. The first leap forward in the practical application of machine learning and artificial intelligence came when business websites, including social media platforms, e-commerce portals and video streaming sites, realized that they needed meaningful insights from data and user behavior. That is when big technology companies started using ML and AI on their web servers and cloud infrastructure. Artificial intelligence has always consumed a lot of computing resources, and at that time only servers had enough of them to run AI-backed applications 24/7.

Then smartphones became a global phenomenon, and the idea of applying artificial intelligence on edge devices got its start. Even smartphones had enough hardware resources to run machine learning models, and because these pocket computers are recharged regularly, they can sustain that kind of workload. Google's Android (Alphabet Inc.) and Apple's iOS emerged as the most popular mobile operating systems worldwide, and several applications developed for them used machine learning algorithms internally.

At the same time, Google was working on an internal project to develop a machine learning framework that could run on low-power, resource-constrained, low-bandwidth edge devices built around 32-bit microcontrollers or digital signal processors. The result of this project is TensorFlow Lite.

TensorFlow Lite introduced the concept of tiny machine learning, or TinyML. TinyML is a term coined by Pete Warden, TensorFlow Lite engineering lead at Google. In general terms, TinyML refers to the application of machine learning in a small footprint of a few kilobytes on embedded platforms that have ultra-low power consumption, high network latency, and limited RAM and flash memory. Currently, TensorFlow Lite is practically synonymous with TinyML, as it is the most prominent machine learning framework for microcontrollers. TensorFlow Lite is designed to run on Android, iOS, embedded Linux and microcontrollers. The main references for TinyML are currently Pete Warden and Daniel Situnayake's book “TinyML: Machine Learning with TensorFlow Lite on Arduino and Ultra-Low-Power Microcontrollers”, published in 2020, and the TensorFlow Lite documentation.

What is TinyML?

Currently, we can define TinyML as a subfield of machine learning that applies machine learning and deep learning models to embedded systems running on microcontrollers, digital signal processors or other specialized ultra-low power processors. Technically, these embedded systems must have a power consumption of less than 1 mW so that they can operate for weeks, months or even years without recharging or replacing the battery.
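The link between power draw and battery life can be checked with simple arithmetic. The sketch below is only an estimate: the coin cell figures (about 225 mAh at a nominal 3 V) are assumed typical values, not taken from a specific datasheet, and the function name `battery_life_days` is illustrative. It ignores self-discharge and regulator losses.

```python
def battery_life_days(capacity_mah: float, voltage_v: float, load_mw: float) -> float:
    """Estimate runtime in days for a constant load.

    Ignores self-discharge, regulator losses and capacity derating.
    """
    energy_mwh = capacity_mah * voltage_v  # mAh * V = mWh
    hours = energy_mwh / load_mw
    return hours / 24.0


# Assumed coin cell: roughly 225 mAh at a nominal 3 V.
for load_mw in (1.0, 0.1, 0.01):
    days = battery_life_days(225.0, 3.0, load_mw)
    print(f"{load_mw} mW average load: about {days:.0f} days")
```

Under these assumptions, a constant 1 mW load drains the cell in about four weeks, while an average draw of 0.1 mW stretches that to roughly nine months. This is why the average consumption of a device that must last months or years on a small battery has to sit well below 1 mW.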

Often these embedded systems are IoT devices that remain connected to the Internet. TinyML-enabled embedded devices are designed to run some machine learning algorithm for a specific task – usually a part of edge computing within the device.

A TinyML machine learning model running on the system is typically a few kilobytes in size and performs a specific cognitive function, such as recognizing a wake word, identifying people or objects, or gaining insights from data from a specific sensor. With the TinyML machine learning model running 24/7, the device should still have power consumption in the milliwatt or microwatt range. The following features characterize a TinyML application:

- It runs on a microcontroller, digital signal processor, low-power microcomputer hosting embedded Linux, or a mobile platform with explicitly limited RAM, flash memory, and battery power to implement a machine learning model.

- It runs on ultra-low power devices with power consumption in mW or µW while deriving inferences from the machine learning model running 24/7.

- It implements machine learning at the edge of the network and therefore requires no data transfer or exchange with a cloud or server. That is, the machine learning model must execute within the edge device without any data communication over the network. The network should only be used to communicate the model's inference results to a server, cloud or controller, if necessary.

- The TinyML machine learning model must take up minimal space, typically a few tens of kilobytes, to run on microcontrollers and microcomputers.

How TinyML works

TensorFlow Lite is the most widely used machine learning framework for microcontrollers and microcomputers. It is a deep learning framework that runs already trained neural network models for inference on the device.

The microcontroller captures data from sensors (such as microphones, cameras, or other embedded sensors). This data is fed to a machine learning model that was trained on a cloud platform before being transferred to the microcontroller.

Training for these models is usually batch training in offline mode. The sensor data that must be used to learn and derive inferences is already determined according to the specific application. For example, if the model has to be trained to recognize a wake word, it is already designed to process a continuous stream of audio from a microphone. Data set selection, normalization, model underfitting or overfitting, regularization, data augmentation, training, validation and testing are all already done with the help of a cloud platform like Google Colab in the case of TensorFlow Lite. After offline batch training, a fully trained model is finally converted and ported to the microcontroller, microcomputer or digital signal processor.
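Porting the converted model usually means compiling it into the firmware as a byte array; the TensorFlow Lite for Microcontrollers examples use `xxd -i` for this. The Python sketch below imitates that step under stated assumptions: the function name `to_c_array` and the variable name `g_model` are illustrative, and the input bytes are placeholder data rather than a real model.

```python
def to_c_array(data: bytes, var_name: str = "g_model", per_line: int = 12) -> str:
    """Render raw bytes as C source defining a const byte array and its length."""
    lines = []
    for i in range(0, len(data), per_line):
        chunk = data[i:i + per_line]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    body = "\n".join(lines)
    return (
        f"const unsigned char {var_name}[] = {{\n{body}\n}};\n"
        f"const unsigned int {var_name}_len = {len(data)};\n"
    )


# Placeholder bytes standing in for the contents of a converted model file.
placeholder_model = bytes(range(20))
print(to_c_array(placeholder_model))
```

The generated source file can then be compiled together with the rest of the embedded application, so the model lives in flash memory alongside the code.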

Once ported to an embedded system, the model does not require further training. Instead, it only consumes real-time data from sensors or input devices and applies the model to that data. A TinyML machine learning model therefore needs to be highly robust, because it may be retrained only after years, or never. All possibilities of underfitting and overfitting need to be checked so that the model remains relevant for a very long time, ideally indefinitely. That is why TinyML machine learning models usually go through a rigorous training, testing, and validation procedure before conversion. The relevance of the model largely depends on selecting an appropriate dataset and on normalizing and regularizing it properly.
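The inference-only behavior can be sketched in plain Python. Everything specific here is made up for illustration: the weights, the bias, the threshold and the simulated sensor readings are hypothetical, and a single logistic unit stands in for a real neural network. On an actual device this loop would be written in C or C++ against the framework's interpreter.

```python
import math
from collections import deque
from typing import Iterable, Iterator, Sequence

# Hypothetical frozen parameters: fixed at deployment, never updated on the device.
WEIGHTS = (0.8, -0.5, 0.3)
BIAS = -0.1


def predict(window: Sequence[float]) -> float:
    """Apply the frozen model (one logistic unit) to a window of sensor samples."""
    z = BIAS + sum(w * x for w, x in zip(WEIGHTS, window))
    return 1.0 / (1.0 + math.exp(-z))


def run_inference(samples: Iterable[float], threshold: float = 0.5) -> Iterator[bool]:
    """Slide a window over incoming samples and yield one decision per full window."""
    window = deque(maxlen=len(WEIGHTS))
    for sample in samples:
        window.append(sample)
        if len(window) == window.maxlen:
            yield predict(window) >= threshold


# Simulated sensor stream; a real device would read from an ADC or microphone.
simulated_sensor = [0.0, 0.1, 0.0, 2.0, 0.2, 0.1]
print(list(run_inference(simulated_sensor)))
```

Note that nothing in the loop modifies `WEIGHTS` or `BIAS`. The device only evaluates the model, which is why the quality of the offline training matters so much.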

Why TinyML?

TinyML began as an initiative to eradicate or reduce IoT's dependence on cloud platforms for simple, small-scale machine learning tasks. This required implementing machine learning models into the edge devices themselves. TinyML offers the following notable advantages:

- Small footprint: A TinyML machine learning model is just a few tens of kilobytes in size. This can be easily ported to any microcontroller, DSP or microcomputer device.

- Low power consumption: Ideally, a TinyML application should consume less than 1 milliwatt of power. A device can continue deriving inferences from sensor data for months or years with such a small power consumption, even if a coin cell battery powers it.

- Low latency: A TinyML application does not require transferring or exchanging data over the network. All data from the sensors it works on is captured locally and an already trained model is applied to it to derive inferences. The result of inferences can be transferred to a server or cloud for recording or further processing, but data exchange is not necessary for the device to function. This reduces network latency and eradicates dependence on a cloud or server for machine learning tasks.

- Low bandwidth: Ideally, a TinyML application does not require communication with a cloud or server. Even if an Internet connection is used, it serves tasks other than machine learning and is needed only to communicate inferences to a cloud or server. This does not affect the main embedded task or the machine learning task performed by the edge device. The device can therefore maintain its full functionality even with low bandwidth or no internet connection.

- Privacy: Privacy is a significant issue in IoT. In TinyML applications, the machine learning task is performed locally without storing or transferring sensor or user data to a server or cloud. These applications therefore carry far fewer privacy risks, even when they are connected to a network.

- Low cost: TinyML is designed to run on 32-bit microcontrollers or DSPs. These microcontrollers can cost as little as a few cents, and an entire embedded system built around them costs less than $50. This makes TinyML a very cost-effective way to run small-scale machine learning applications, and it is particularly useful when machine learning needs to be applied in IoT applications.
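To put the bandwidth advantage in rough numbers, the sketch below compares streaming raw audio to a server with sending only inference results. The audio format (16 kHz, 16-bit, mono) and the rate of one single-byte result per second are assumptions chosen for illustration, not figures from a specific device.

```python
def raw_audio_bytes_per_second(sample_rate_hz: int, bits_per_sample: int, channels: int = 1) -> int:
    """Uncompressed PCM data rate in bytes per second."""
    return sample_rate_hz * (bits_per_sample // 8) * channels


raw_rate = raw_audio_bytes_per_second(16_000, 16)  # assumed microphone stream
result_rate = 1  # assumed: one 1-byte label per second

print(f"raw audio stream:  {raw_rate} B/s")
print(f"inference results: {result_rate} B/s")
print(f"reduction factor:  {raw_rate // result_rate}x")
```

Even with these modest assumptions the raw stream needs tens of kilobytes per second, while the inference results fit comfortably into the smallest low-power radio links.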

How to begin?

To get started with TinyML on TensorFlow Lite, first of all, you need a compatible microcontroller board. The TensorFlow Lite for Microcontrollers library supports the following microcontrollers:

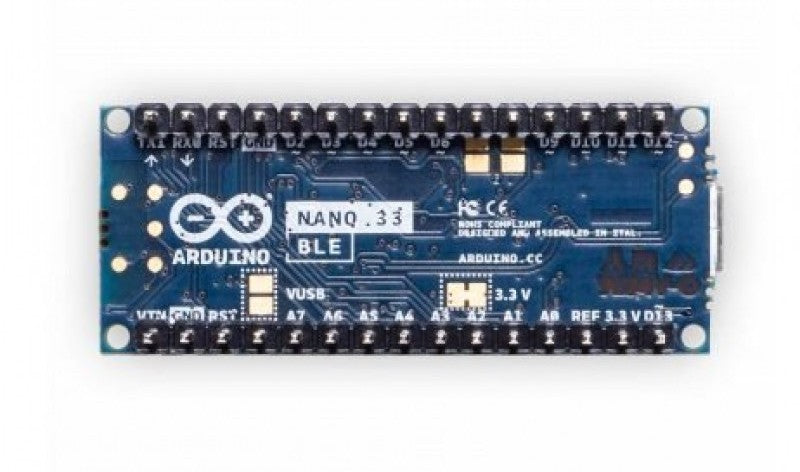

- Arduino Nano 33 BLE Sense

- SparkFun Edge

- STM32F746 Discovery Kit

- Adafruit EdgeBadge

- Adafruit TensorFlow Lite Kit for Microcontrollers

- Adafruit Circuit Playground Bluefruit

- Espressif ESP32-DevKitC

- Espressif ESP-EYE

- Wio Terminal: ATSAMD51

- Himax WE-I Plus EVB Endpoint AI Development Board

- Synopsys DesignWare ARC EM software development platform

- Sony Spresense

To run a machine learning model, a 32-bit microcontroller with sufficient flash memory, RAM, and clock frequency is required. These boards also come with multiple onboard sensors, so they can run embedded applications and apply machine learning models to the sensor data.

In addition to a hardware platform, you need a laptop or computer to design a machine learning model. Different programming tools are available for each hardware platform that uses the TensorFlow Lite for Microcontrollers library to build, train, and port machine learning models. TensorFlow Lite is open source and can be used and modified without any license fees. To get started with TinyML using TensorFlow Lite, you just need one of the embedded hardware platforms listed above, a computer/laptop, a USB cable, a USB-to-serial converter — and a determination to learn machine learning with embedded systems.

TinyML Compatible Machine Learning Models

The TensorFlow Lite library for microcontrollers supports a limited subset of machine learning operations. These operations are listed in all_ops_resolver.cc. TensorFlow Lite also hosts some example machine learning models that can be used directly for learning purposes or for developing an application with embedded machine learning. These example models cover image classification, object detection, pose estimation, speech recognition, gesture recognition, image segmentation, text classification, on-device recommendation, natural language question answering, digit classification, style transfer, smart reply, super-resolution, audio classification, reinforcement learning, optical character recognition, and on-device training.

TinyML Examples

In addition to the machine learning models hosted in TensorFlow Lite, the classic examples from the TensorFlow Lite library for microcontrollers – Hello World, Micro Speech, Magic Wand, and Person Detection – are good starting points for exploring TinyML. These examples can be accessed in the TensorFlow Lite documentation and in the TinyML book written by Pete Warden and Daniel Situnayake.

TinyML Applications

Although TinyML is still in its infancy, it has already found practical applications in many areas, including:

Industrial Automation: TinyML can be used to make manufacturing smarter, for example through predictive machine maintenance and by optimizing machine operations for greater productivity. TinyML can also improve machine performance to raise product quality and detect flaws and imperfections in manufactured products early.

Agriculture: TinyML can be used to detect diseases and pests in plants. Because TinyML operates independently of an internet connection, it can seamlessly implement automation and IoT on agricultural farms.

Health: TinyML is already in use for early detection of mosquito-borne diseases. It can also be used in fitness devices and health equipment.

Retail: TinyML can be used to automate inventory management in retail stores. A TinyML app can track items on store shelves and send an alert before they go out of stock with AI-enabled cameras. It can also help derive inferences about customer preferences in the retail sector.

Transportation: TinyML applications can be used to monitor traffic and detect traffic jams. This application can be combined with traffic light management to optimize traffic in real time. It can also be used for accident detection to make automatic alerts to the nearest trauma center.

Law enforcement: TinyML can be used to detect illegal activities such as riots and robberies using machine learning and gesture recognition. A similar application can also be used for bank ATM security. A TinyML model can predict whether the user is a genuine customer making a transaction or an intruder trying to hack or break the ATM by monitoring the user's activity.

Ocean Life Conservation: TinyML applications are already in use for real-time monitoring of whales in the waterways around Vancouver and Seattle, to help keep whales and ships apart in busy shipping lanes. Similar applications can monitor poaching, illegal mining and deforestation. TinyML devices can also be deployed to monitor the well-being of coral reefs.

Future of TinyML

With a small footprint, low power consumption, and little or no dependency on internet connectivity, TinyML has enormous scope in a future where a large portion of narrow artificial intelligence will be implemented in edge devices or standalone embedded devices. TinyML will strengthen IoT applications and make them more private and secure. Although TensorFlow Lite is currently the dominant machine learning framework for microcontrollers and microcomputers, similar projects such as uTensor and ARM's CMSIS-NN are also being developed. TensorFlow Lite is an open source project that is off to a great start with the team at Google, but it still needs community initiative to become popular.

Conclusion

TinyML itself is a revolutionary idea that combines embedded systems with machine learning. The technology could emerge as an important subfield in machine learning and artificial intelligence as narrow AI peaks across multiple industries and domains. TinyML offers a solution to many problems currently faced by the IoT industry and experts applying machine learning in various domain-specific fields. The idea of using machine learning on edge devices with minimal computational footprint and power consumption could bring about a significant change in the way embedded systems and robots are designed. Current frameworks require more support from the community and chip designers. TinyML is destined to become popular as hardware and supported programming tools and libraries expand soon.